Importing custom tools into your Unity project can be a real hassle. While some assets are available through the Unity Asset Store and can be imported easily via the integrated Package Manager, many tools – especially open-source ones or custom utilities – are distributed as UPM packages on GitHub. These typically require manually copying and pasting Git URLs into the Package Manager. Not exactly a smooth experience.

As someone who frequently searches for the perfect tool to solve specific problems, I often find myself digging through forums, blog posts and GitHub repositories. Once I have found something useful, I then need to maintain my own list of repositories – just to keep track. But even with that list, there is no guarantee these tools will play nicely together or work with my current Unity version, especially once support slows down or stops.

The distribution system was originally developed for the MegaPint project and will be explained using it as the primary example. If you are not yet familiar with MegaPint, feel free to check out one of my previous posts where I introduced the project in more detail.

Current Workflow

To better understand the motivation behind the system I built, it helps to look at the current workflow required to import a single UPM package into a Unity project. Right now, if you want to use a tool distributed via GitHub as a UPM package, you first need to find the repository – usually through search engines, forums or a personal list of saved links. Then you return to Unity, open the Package Manager and use the “Add package from Git URL” option to paste the link. After waiting for the package to load and import, you are finally able to use the tool.

While this might sound straightforward at first glance, it quickly becomes tedious when you are dealing with multiple tools across multiple projects. It breaks your flow, pulls you out of the Unity Editor and relies on you managing a list of Git URLs somewhere outside the engine – whether in bookmarks, notes or memory.

The Ideal Experience

What I wanted instead was something much closer to the Asset Store experience. When using the Asset Store, all your purchased packages appear directly inside Unity’s Package Manager. You click one button and the tool is added to your project – no switching context, no URL hunting and no guesswork.

So Why Not Just Use the Asset Store?

It is a fair question. The Asset Store offers visibility, convenience and even version compatibility checking. But for me, there are a few key reasons why I have chosen not to go that route.

1. Marketing Requirements

Publishing on the Asset Store means packaging, branding and promoting your tools. While I already do this to some extent, it is not something I want to spend much of my time on. My focus is on development, not advertising.

2. Legal and Commercial Implications

Even free assets come with legal responsibilities when distributing via a commercial platform. Hosting through Unity might raise questions around licensing, support obligations or income reporting – even if you never charge a cent. I prefer to avoid that unnecessary overhead.

3. Platform Independence

As much as I respect Unity, recent business decisions – particularly around monetization – have shaken trust within the community. Hosting tools on GitHub gives me more control, more flexibility and aligns with a broader ecosystem that developers already trust.

By building a system that replicates the ease of the Asset Store while using GitHub as the backend, I get the best of both worlds – a smooth in-Editor experience and a transparent open distribution method. This is what led to the development of the Package Collection – a smarter approach to managing UPM based tools directly inside Unity.

Conceptual Idea

The Package Collection system was designed with three major goals in mind. First – Streamline the Import Process – Make it easier and faster for developers to bring tools into their projects without repetitive manual work. Secondly – Eliminate Dependence on Git URLs – Ideally, fully removing the need for developers to even see or copy Git URLs when importing packages. And lastly – Make Tools Browsable In Engine – Replicating the convenience of the Unity Asset Store by allowing tools to be browsed and managed directly inside the Editor.

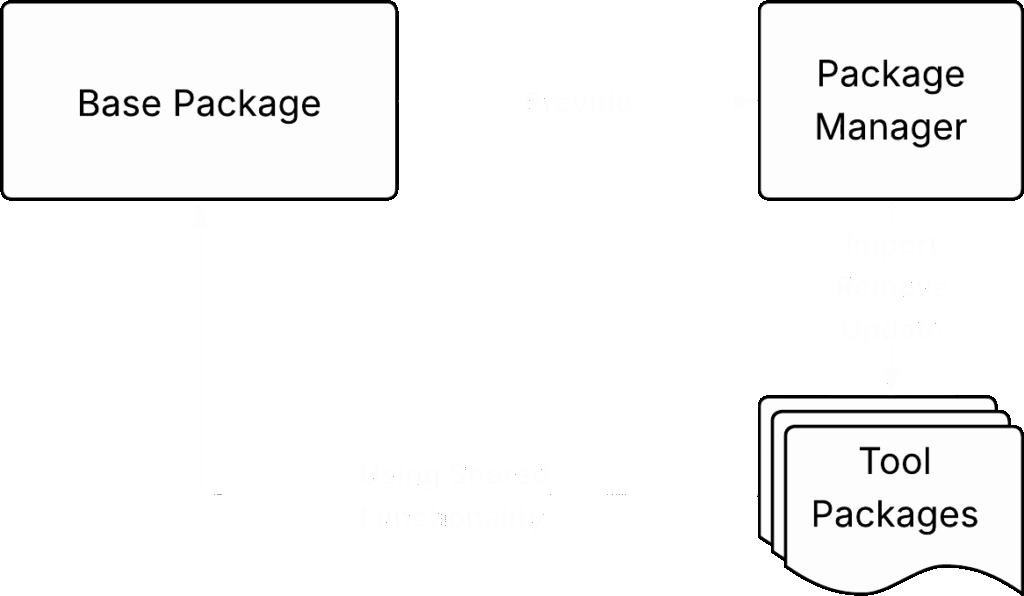

At its core, every tool is distributed as an individual UPM package. But since it is called Package Collection it cannot be a loose bundle of tools. It is a cohesive system. That is where the Base Package of the collection system comes into play. The Base Package is the only component that developers need to import manually using the regular Git URL method. Once imported, it acts as the heart of the entire collection and enables the feature that truly elevates this system.

Inspired by Unity’s own Package Manager, the Base Package includes a fully integrated internal Package Manager or Tool Browser. It provides a searchable registry of available tools, a one click install and uninstall functionality and access to descriptions, dependencies, version compatibilities and even samples. And most importantly – it does this without requiring developers to leave the Unity Editor or track down any external Git URLs. All Git repository links for the tools are stored internally within the Base Package alongside the other metadata. This keeps the system centralized and developer friendly.

Unlike monolithic toolkits that bundle everything into a single bloated package, the Package Collection concept keeps tools lean and modular. Developers only install what they need. And because all tools share access to the Base Package, common utilities and functionality (like logging, version checks or UI elements) are implemented once – then reused across the entire collection. This avoids duplication, reduces package size and minimizes maintenance overhead. It also ensures consistency across all tools in the collection.

Technical Implementation

Since my own implementation of this system is published within the MegaPint Collection and all the code is licensed under Apache 2.0, feel free to take an in-depth look at how I wrote the system. Therefore, I will only cover the basics and some notable technical details in this part of the article.

Basics

Creating the bare minimum of a custom Unity package import system is surprisingly straightforward. Unity already provides a well-documented API for handling UPM operations via code. At its core, all you need are the following methods from the UnityEditor.PackageManager.Client class.

Client.Add() – to import a package using a Git URL

Client.Remove() – to uninstall a package using its name

Client.Embed() – to embed an external package into your project for customization

With just these three functions, you can build a basic importer that supports full Git URLs. You can also fine tune imports by targeting specific branches, tags or commits. For example, to import a specific tag, you can simply append #<tag-name> to the end of your Git URL (e.g., https://github.com/your/tool.git#v1.0.0). This core functionality works without any third party dependencies or external system calls – just native Unity capabilities.
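The URL convention described above can be sketched in a few lines. This is only an illustration written in Python so it can run outside Unity; the helper name git_url_with_revision is hypothetical and not part of the MegaPint code, and inside the Editor the resulting string would simply be passed to Client.Add().

```python
from typing import Optional


def git_url_with_revision(repo_url: str, revision: Optional[str] = None) -> str:
    """Return a Git URL for UPM, optionally pinned to a branch, tag or commit.

    The revision is appended after a '#', as in
    https://github.com/your/tool.git#v1.0.0
    """
    # Drop a revision that may already be attached so we never end up with two.
    base = repo_url.split("#", 1)[0]
    return f"{base}#{revision}" if revision else base


if __name__ == "__main__":
    # Placeholder repository URL taken from the example in the text.
    print(git_url_with_revision("https://github.com/your/tool.git", "v1.0.0"))
    print(git_url_with_revision("https://github.com/your/tool.git#v0.9.0", "v1.0.0"))
```

Both calls print the same pinned URL, because an existing revision is replaced rather than stacked.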

However, building a truly user friendly experience requires a bit more effort. For example, if you want to show users which tools are already installed, including their version, you need to read Unity’s internal package registry. Parsing this information takes time, especially on large projects or initial load. To avoid delays and improve responsiveness, it is highly recommended to implement a caching system. This can be easily achieved by using Unity’s [InitializeOnLoad] attribute. This attribute allows you to run initialization code automatically every time Unity reloads its domain (e.g., after scripts compile). You can use it to pre load or cache registry data, track installed packages and update version info.

Establishing Communication between Packages

One of the key architectural principles of the distribution system is that each tool remains an individual UPM package. This promotes modularity, maintainability and reusability. However, if you want to avoid exposing all your internal code (which is generally discouraged but sometimes necessary), you will naturally start using Assembly Definition Files. In Unity, an Assembly Definition File allows you to define a custom assembly for a group of scripts. Instead of compiling all scripts in the default Assembly-CSharp, Unity will create separate DLLs for each defined assembly. This improves compilation times and enables better encapsulation of your code – only the assemblies you explicitly expose can be accessed from outside.

In this package collection system, two major goals clash:

The Base Package needs to reference the individual tool packages to display and manage them.

Each tool package might want to rely on shared utility functions provided by the Base Package.

This can lead to circular dependencies – something Unity’s assembly system does not allow.

To enable communication between the modular tool packages while avoiding dependency issues, each tool in the system is structured using two assemblies. The first is the internal assembly, which contains the core functionality of the tool. This assembly is designed to be entirely self-contained, meaning it does not depend on any other scripts or packages outside of its own structure. Keeping this part isolated ensures that each tool remains cleanly modular and independent. The second assembly, however, handles editor specific logic that may need to interact with other tools or shared infrastructure. This assembly is not defined within the tool package itself – it is centralized in the Base Package, in my case under the shared name MegaPint.Editor. Any scripts that need to communicate across packages or interact with the distribution system must be part of this shared editor assembly.

But this raises a natural question: how can scripts from one package be compiled into an assembly that is technically defined in another? The answer lies in Unity’s Assembly Definition Reference Files. These work like symbolic links to existing assemblies. Rather than creating a new assembly, a reference file simply tells Unity to treat the scripts in its folder as part of a pre-defined assembly – referenced, in this setup, by the name of the assembly rather than its GUID.

This means you can define your main shared editor assembly once in the base package and then place lightweight reference files inside each tool package to connect their scripts to it. It works in reverse as well. If the Base Package needs access to internal functionality of a tool, you can similarly create reference files pointing from the Base Package to the tool’s internal assembly. These references remain valid even if the target assembly has not been imported yet, making the system remarkably flexible.

Supporting Versioning in the Distribution System

A key requirement for a reliable distribution system is that it supports versioning. Tool development is rarely a one-time effort. Tools evolve over time and developers need a way to update them. In the case of the MegaPint collection, each version of the Base Package defines a specific set of compatible versions for all included tool packages. These associations are stored in a structured Package Manager data file, which includes not only the Git URL of each tool but also the exact version tag that should be used when importing it. The versioning mechanism relies on Git tags, which are applied to the release commits of each tool. By appending the correct version tag to a Git URL, Unity’s built-in UPM system can fetch the precise version defined in the data file. This enables reproducible environments – importing a specific version of the Base Package ensures that all related tools will match the expected version.

In addition to installation, this versioning system also allows for update detection. If a tool is installed at a version that differs from the one defined in the currently loaded data file, the system can notify the user and offer an update to align it with the collection’s recommended state.
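The update check described above boils down to comparing two tables. The following Python sketch assumes a hypothetical shape for the data file (package name mapped to a Git URL and an expected tag) and for the list of installed packages; the real MegaPint data file may be structured differently, and the package names are made up.

```python
from typing import Dict, List, NamedTuple


class ToolEntry(NamedTuple):
    """One entry of the (hypothetical) collection data file."""
    git_url: str
    version: str  # Git tag the current Base Package expects, e.g. "v1.2.0"


def find_outdated(collection: Dict[str, ToolEntry],
                  installed: Dict[str, str]) -> List[str]:
    """Return the names of installed tools whose version differs from the
    version the collection expects. Tools that are not installed, or that
    are unknown to the collection, are ignored."""
    return sorted(
        name
        for name, version in installed.items()
        if name in collection and collection[name].version != version
    )


if __name__ == "__main__":
    # Made-up example data; names and URLs are placeholders.
    collection = {
        "com.example.tool-a": ToolEntry("https://github.com/your/tool-a.git", "v1.2.0"),
        "com.example.tool-b": ToolEntry("https://github.com/your/tool-b.git", "v2.0.0"),
    }
    installed = {"com.example.tool-a": "v1.1.0", "com.example.tool-b": "v2.0.0"}
    print(find_outdated(collection, installed))
```

Note that the check is "differs from", not "older than": the goal is to align a project with the collection's recommended state, which can also mean moving a tool back to the expected version.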

Things become a bit more tricky when checking for updates on the Base Package itself. Since the Base Package acts as the root of the system and there is no higher level manager overseeing it, version checking must be handled differently. Fortunately, Git provides the necessary tools to solve this. Because the Base Package is also imported using a version specific tag, its current version can be retrieved directly from Unity’s internal package metadata. From there, a check can be performed against the list of all available tags in the corresponding GitHub repository to see if a newer version exists. To do this, the system can launch a background process and execute Git commands such as git ls-remote --tags <repository-url>. This retrieves all version tags available in the repository. The system then compares these tags against the currently installed version and determines if an update is available.
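To make the tag comparison concrete, here is a minimal Python sketch of the parsing step. It assumes tags of the form v1.2.3 or 1.2.3 and skips anything else; the function names and the sample output are made up for illustration, and in the Editor the same logic would run on the output of the background Git process.

```python
import re
import subprocess
from typing import List, Optional, Tuple

Version = Tuple[int, ...]


def parse_version(tag: str) -> Optional[Version]:
    """Turn 'v1.2.3' or '1.2.3' into (1, 2, 3); return None for other tags."""
    match = re.fullmatch(r"v?(\d+(?:\.\d+)*)", tag)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


def tags_from_ls_remote(output: str) -> List[str]:
    """Extract tag names from the output of `git ls-remote --tags`."""
    tags = set()
    for line in output.splitlines():
        _sha, _, ref = line.partition("\t")
        if ref.startswith("refs/tags/"):
            name = ref[len("refs/tags/"):]
            # Annotated tags are listed twice; the second entry ends in "^{}".
            tags.add(name[:-3] if name.endswith("^{}") else name)
    return sorted(tags)


def newest_tag(output: str) -> Optional[str]:
    """Return the tag with the highest version number, if there is one."""
    versioned = [(parse_version(tag), tag) for tag in tags_from_ls_remote(output)]
    versioned = [(version, tag) for version, tag in versioned if version is not None]
    return max(versioned)[1] if versioned else None


def update_available(installed_tag: str, output: str) -> bool:
    """True if the repository offers a newer version than the installed one."""
    current = parse_version(installed_tag)
    latest = newest_tag(output)
    if current is None or latest is None:
        return False
    return parse_version(latest) > current


def fetch_ls_remote(repository_url: str) -> str:
    """Run git in a child process; requires git on the PATH and network access."""
    result = subprocess.run(
        ["git", "ls-remote", "--tags", repository_url],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


# Made-up sample output (shortened hashes) so the sketch runs offline.
SAMPLE_OUTPUT = "\n".join([
    "1111111\trefs/tags/v1.0.0",
    "2222222\trefs/tags/v1.2.0",
    "3333333\trefs/tags/v1.2.0^{}",
    "4444444\trefs/tags/v1.10.0",
    "5555555\trefs/tags/nightly",
])

if __name__ == "__main__":
    print(newest_tag(SAMPLE_OUTPUT))
    print(update_available("v1.2.0", SAMPLE_OUTPUT))
```

Versions are compared as tuples of integers rather than as strings, so v1.10.0 correctly counts as newer than v1.2.0.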

Importing Multiple Packages At Once

One particularly frustrating issue surfaced during later stages of developing the package distribution system. Some tools within the collection depend on others, so I had implemented a dependency resolution mechanism. The idea was simple – before importing a given package, the system first imports the required dependencies. I had tested this setup thoroughly with my existing tools, which had a single dependency at most, and everything worked as expected.
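The "dependencies first" idea can be sketched as a depth-first walk over a dependency table. This Python version is only an illustration under assumed names (install_order, the package identifiers and the table layout are hypothetical) and is not the resolver used in MegaPint.

```python
from typing import Dict, List, Set


def install_order(package: str, dependencies: Dict[str, List[str]]) -> List[str]:
    """Return the packages to import, dependencies first, each exactly once."""
    order: List[str] = []
    visiting: Set[str] = set()

    def visit(name: str) -> None:
        if name in order:
            return  # already scheduled
        if name in visiting:
            raise ValueError(f"circular dependency involving {name}")
        visiting.add(name)
        for dependency in dependencies.get(name, []):
            visit(dependency)
        visiting.discard(name)
        order.append(name)  # added only after all of its dependencies

    visit(package)
    return order


if __name__ == "__main__":
    # Made-up dependency table: tool-c needs tool-a and tool-b, tool-b needs tool-a.
    table = {
        "tool-c": ["tool-a", "tool-b"],
        "tool-b": ["tool-a"],
    }
    print(install_order("tool-c", table))
```

The resulting list is what would then be handed to the import step one package at a time.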

However, trouble began when I introduced a new tool that required multiple dependencies. Suddenly, only the first two packages were ever imported. All remaining packages were silently skipped. This behaviour was both confusing and inconsistent, and it took a significant amount of time and deep debugging to understand the root cause.

After investigating Unity’s internal behaviour, I discovered the culprit: domain reloads. When a package is imported, Unity scans the project for new or changed scripts. If any are found, it triggers a domain reload, which recompiles all scripts and resets static classes. This is a normal part of Unity’s script lifecycle. But here is the issue: if you try to queue multiple import commands in quick succession – especially for more than two packages – Unity will begin a domain reload after the second package finishes importing, which resets all static data and clears any remaining queued operations. In other words, any dependency import logic stored in static fields or singletons is wiped out by the domain reload before it gets a chance to complete. This resulted in the packages silently failing to import without any obvious errors.

After extensive testing and failed attempts to delay the domain reloads, I found a reliable workaround – manually editing Unity’s manifest file. By writing the required packages directly into the dependencies section of the manifest, I could let Unity itself handle the import process. Unity reloads this file, resolves dependencies internally and sequentially imports each listed package. It also handles recompilation in a way that preserves progress between domain reloads, ensuring that all required packages are eventually imported, regardless of how many are listed.
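As a rough sketch of this workaround, the following Python function adds Git packages to the dependencies section of a manifest. It assumes the manifest is the JSON file at Packages/manifest.json with a top-level "dependencies" object mapping package names to versions or Git URLs; the function name and the package names are placeholders, and the sketch works on text so it can be tried without touching a real project.

```python
import json
from typing import Dict


def add_dependencies(manifest_text: str, packages: Dict[str, str]) -> str:
    """Return the manifest text with the given packages added to 'dependencies'.

    `packages` maps a package name to its Git URL (optionally with '#tag').
    Existing entries with the same name are overwritten; everything else in
    the manifest is left as it was.
    """
    manifest = json.loads(manifest_text)
    dependencies = manifest.setdefault("dependencies", {})
    for name, git_url in packages.items():
        dependencies[name] = git_url
    return json.dumps(manifest, indent=2) + "\n"


if __name__ == "__main__":
    # Made-up manifest and packages for illustration.
    original = '{"dependencies": {"com.unity.ugui": "1.0.0"}}'
    updated = add_dependencies(original, {
        "com.example.tool-a": "https://github.com/your/tool-a.git#v1.2.0",
        "com.example.tool-b": "https://github.com/your/tool-b.git#v2.0.0",
    })
    print(updated)
```

In a real project the text would be read from and written back to the manifest file in one go, so that Unity picks up all new entries together the next time it resolves packages.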

While this approach works efficiently, it comes with a serious warning. The manifest file is an internal Unity file. Its structure, while currently stable and documented in unofficial sources, is not guaranteed to remain unchanged in future Unity versions. If Unity alters its format, any automated editing performed by the distribution system could invalidate the file and potentially corrupt the project setup. Because of this risk, this approach is reserved for special cases only – specifically, when more than two packages need to be installed at once and the standard import workflow would otherwise fail due to domain reload conflicts.

Limitations

While this distribution system undoubtedly creates a smoother and more polished workflow for developers importing tools into their projects, it introduces a significant shift in responsibility to the tool developer. The convenience experienced by the end user comes at the cost of added complexity for the creator of the tools and the infrastructure supporting them.

First and foremost, the developer is now responsible not only for building the tools themselves but also for constructing and maintaining the entire infrastructure of the distribution system. This includes implementing the internal Package Manager logic, managing Git URLs, maintaining version control across multiple repositories and ensuring that the UI for in Editor browsing, importing and updating tools is both user friendly and performant. Handling caching strategies, monitoring domain reloads and mitigating the performance impact of reading Unity’s package registry also becomes a necessary part of the job.

Another challenge lies in the versioning of packages. While traditional Unity workflows often rely on a single package or a small handful of dependencies, a collection based system introduces layered compatibility concerns. Each tool must be tagged correctly in Git, with those tags aligned to specific versions of the collection. This process also demands meticulous maintenance of the metadata that describes the collection. With each tool update, the developer must manually modify the metadata file that defines all version numbers, Git URLs, descriptions and dependency relations. Mistakes here can lead to incorrect versions being installed or to unresolved dependencies, both of which can result in broken functionality for the end user.

Because of these limitations, adopting such a distribution system is only recommended if you have a significant number of tools that you plan to maintain and distribute as a cohesive collection. The infrastructure, versioning, dependency management and editor integration introduce a huge amount of overhead that simply does not justify itself for smaller individual tools. In the case of a single utility or a lightweight package, the complexity added by the collection system would likely outweigh any of the benefits it provides.

Further Possible Applications

One further implication of this distribution system – perhaps its most exciting potential – lies in its ability to fundamentally transform how tools are discovered and adopted within the Unity ecosystem. Imagine extending this concept beyond a personal or team based collection. By integrating with public registries like OpenUPM, the system could evolve into a fully fledged in Editor hub for open source tools. Instead of browsing GitHub repositories or saving important URLs, developers could explore and install any publicly listed UPM package from OpenUPM through a polished, searchable interface – right inside the Unity Editor, just like the Asset Store.

Such a system would not just streamline workflows, it could redefine them. It would empower developers of all levels to discover, test and adopt tools effortlessly, without ever leaving their project environment. This approach would bring the open source ecosystem into the heart of Unity, giving it the same visibility, accessibility and usability that commercial assets currently enjoy. For tool creators, it offers a new way to reach users without the legal and logistical overhead of the Asset Store. And for the Unity community as a whole, it sets the stage for a more open, interconnected and productive future.

Thank you for reading – and happy developing!